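Compared with the ResNet18 I wrote earlier, the ResNet50 below is organized in a more engineering-oriented way, and the same code also works for other classification tasks.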

My code file structure

1. Data processing

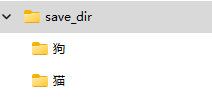

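First, the data has already been sorted into classes.

The folder structure is one subfolder per class, for example save_dir\狗\000000.jpg.

Next, split the dataset.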

split_data.py

    import os
    import random
    import shutil


    def move_file(target_path, save_train_path, save_val_path, scale=0.1):
        """Copy the files of one class folder into its train and val folders."""
        file_list = os.listdir(target_path)
        random.shuffle(file_list)

        # The first `scale` fraction of the shuffled files becomes the validation set.
        number = int(len(file_list) * scale)
        train_list = file_list[number:]
        val_list = file_list[:number]

        # Files are copied, not moved, so the source folder stays intact.
        for file in train_list:
            shutil.copyfile(os.path.join(target_path, file),
                            os.path.join(save_train_path, file))
        for file in val_list:
            shutil.copyfile(os.path.join(target_path, file),
                            os.path.join(save_val_path, file))


    def split_classify_data(base_path, save_path, scale=0.1):
        """Split every class folder under base_path into save_path/train and save_path/val."""
        for folder in os.listdir(base_path):
            target_path = os.path.join(base_path, folder)
            save_train_path = os.path.join(save_path, 'train', folder)
            save_val_path = os.path.join(save_path, 'val', folder)
            os.makedirs(save_train_path, exist_ok=True)
            os.makedirs(save_val_path, exist_ok=True)
            move_file(target_path, save_train_path, save_val_path, scale)
            print(folder, 'finish!')


    if __name__ == '__main__':
        base_path = r'C:\Users\Administrator.DESKTOP-161KJQD\Desktop\save_dir'
        save_path = r'C:\Users\Administrator.DESKTOP-161KJQD\Desktop\dog_cat'
        # Fraction of each class used for validation
        scale = 0.1
        split_classify_data(base_path, save_path, scale)

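After running the code above, the output directory contains two folders:

train, the training set, and val, the validation set, each with one subfolder per class.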
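
A quick way to confirm the split is to count the files in each class folder. The helper below is a minimal sketch and not part of the original scripts: the name count_split is hypothetical, and it assumes the output directory has the train/<class>/ and val/<class>/ layout that split_classify_data creates.

```python
import os


def count_split(save_path):
    """Return {'train': {class: n_files}, 'val': {class: n_files}} for a split directory."""
    counts = {}
    for subset in ('train', 'val'):
        subset_dir = os.path.join(save_path, subset)
        counts[subset] = {}
        for folder in sorted(os.listdir(subset_dir)):
            class_dir = os.path.join(subset_dir, folder)
            # Only class subfolders are counted; stray files are ignored.
            if os.path.isdir(class_dir):
                counts[subset][folder] = len(os.listdir(class_dir))
    return counts
```

With scale = 0.1, each class should show roughly nine training files for every validation file.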

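2. Loading the dataset

This file contains a dataset loading function and a learning-rate update function. The dataset loading is generic and can be reused for other classification datasets.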
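
The scripts in this post import their constants (MEAN, STD, DEVICE, EPOCHS, BATCH_SIZE, INPUT_SIZE, LR) from classify_cfg, which is not listed here. The following is only a guess at what such a file might contain. Every value is an assumption: the learning rate and epoch count come from the example in update_lr's docstring, the input size from the default used elsewhere in the post, the batch size is arbitrary, and the mean and std are the usual ImageNet statistics.

```python
# classify_cfg.py (hypothetical contents; the original file is not shown in this post)
import torch

# Use the GPU when one is available, otherwise fall back to the CPU.
DEVICE = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Assumed values, taken from the example in update_lr's docstring.
EPOCHS = 100
LR = 0.001

# Assumed batch size; adjust to the available memory.
BATCH_SIZE = 32

# Matches the default input_size of get_dataset and the size used in mypredict.py.
INPUT_SIZE = 160

# Standard ImageNet channel statistics (RGB order), an assumption that fits
# the ImageNet-pretrained ResNet50 weights used for training.
MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]
```

Keeping these in one module means the training and prediction scripts cannot drift apart on preprocessing values.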

tools.py

    import os

    from torchvision import datasets, transforms

    from classify_cfg import MEAN, STD

    mean = MEAN
    std = STD


    def get_dataset(base_dir='', input_size=160):
        """Build the train/val ImageFolder datasets found under base_dir."""
        dataset = dict()

        transform_train = transforms.Compose([
            # Resize the shorter side to input_size
            transforms.Resize(input_size),
            # Light augmentation: random rotation within +/-15 degrees
            transforms.RandomRotation(15),
            # The images differ in size, so center-crop all of them to input_size x input_size
            transforms.CenterCrop(input_size),
            transforms.ToTensor(),
            # Normalize each channel with the configured mean and std
            transforms.Normalize(mean=mean, std=std)
        ])
        # Validation uses the same preprocessing without the random rotation,
        # so the reported metrics are deterministic.
        transform_val = transforms.Compose([
            transforms.Resize(input_size),
            transforms.CenterCrop(input_size),
            transforms.ToTensor(),
            transforms.Normalize(mean=mean, std=std)
        ])

        base_dir_train = os.path.join(base_dir, 'train')
        train_dataset = datasets.ImageFolder(root=base_dir_train, transform=transform_train)
        # Class names are the subfolder names, in the index order ImageFolder assigns
        classes = train_dataset.classes
        classes_num = len(train_dataset.classes)

        base_dir_val = os.path.join(base_dir, 'val')
        val_dataset = datasets.ImageFolder(root=base_dir_val, transform=transform_val)

        dataset['train'] = train_dataset
        dataset['val'] = val_dataset
        return dataset, classes, classes_num


    def update_lr(epoch, epochs):
        """
        Return the multiplier that LambdaLR applies to the initial learning rate.

        With an initial lr of 0.001 and epochs = 100 (so epochs // 3 == 33):
            epoch < 33         -> lr * 1
            33 <= epoch <= 66  -> lr * 0.5
            epoch > 66         -> lr * 0.1
        """
        if epoch == 0 or epoch < epochs // 3:
            return 1
        elif epoch <= epochs // 3 * 2:
            return 0.5
        else:
            return 0.1
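
To make the schedule concrete, the sketch below finds the first epoch at which each multiplier takes effect for a 100-epoch run. update_lr is repeated so the snippet runs on its own; schedule_boundaries is a hypothetical helper added only for illustration. LambdaLR multiplies the initial learning rate by the returned factor, so the factors do not accumulate from epoch to epoch.

```python
def update_lr(epoch, epochs):
    """Same piecewise multiplier as in tools.py, repeated so this snippet runs on its own."""
    if epoch == 0 or epoch < epochs // 3:
        return 1
    elif epoch <= epochs // 3 * 2:
        return 0.5
    else:
        return 0.1


def schedule_boundaries(epochs):
    """Return {multiplier: first epoch at which that multiplier is used}."""
    first_seen = {}
    for epoch in range(epochs):
        first_seen.setdefault(update_lr(epoch, epochs), epoch)
    return first_seen


print(schedule_boundaries(100))  # {1: 0, 0.5: 33, 0.1: 67}
```

So with 100 epochs the learning rate is halved from epoch 33 on and drops to one tenth of its initial value from epoch 67 on.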

3. Training the model

Once the dataset is loaded, choose the model, the optimizer, and so on, then start training.

mytrain.py

    import os
    import time

    import torch
    import torch.nn as nn
    import torch.optim as optim
    import torchvision
    from torch.optim.lr_scheduler import LambdaLR
    from torch.utils.data import DataLoader
    from torch.utils.tensorboard import SummaryWriter
    from torchvision.models import ResNet50_Weights

    from classify_cfg import BATCH_SIZE, DEVICE, EPOCHS, INPUT_SIZE, LR
    from tools import get_dataset, update_lr


    def train(model, dataset, epochs, batch_size, device, optimizer, scheduler, criterion, save_path):
        train_loader = DataLoader(dataset.get('train'), batch_size=batch_size, shuffle=True)
        # Shuffling is unnecessary for evaluation
        val_loader = DataLoader(dataset.get('val'), batch_size=batch_size, shuffle=False)

        # TensorBoard event files are written to save_path
        writer = SummaryWriter(save_path)
        best_acc = 0.0

        # The training log is also written to a plain-text file
        with open(os.path.join(save_path, 'log.txt'), 'w', encoding='utf-8') as f:
            for epoch in range(epochs):
                model.train()
                train_correct = 0
                sum_loss = 0.0
                batch_count = 0
                accuracy = 0.0
                total_num = len(train_loader.dataset)

                for data, target in train_loader:
                    start_time = time.time()
                    data, target = data.to(device), target.to(device)

                    output = model(data)
                    loss = criterion(output, target)
                    optimizer.zero_grad()
                    loss.backward()
                    optimizer.step()

                    sum_loss += loss.item()
                    train_predict = output.argmax(dim=1)
                    train_correct += (train_predict == target).sum().item()
                    batch_count += len(data)
                    # Running accuracy over the samples seen so far in this epoch
                    accuracy = train_correct / batch_count * 100
                    end_time = time.time()

                    s = (f'Epoch:[{epoch + 1}/{epochs}] Batch:[{batch_count}/{total_num}] '
                         f'train_acc: {accuracy:.2f} train_loss: {loss.item():.3f} '
                         f'time: {int((end_time - start_time) * 1000)} ms')
                    print(s)
                    f.write(s + '\n')

                # Log the epoch accuracy and the mean batch loss
                writer.add_scalar('train_acc', accuracy, epoch)
                writer.add_scalar('train_loss', sum_loss / len(train_loader), epoch)
                scheduler.step()

                # Keep the checkpoint with the best training accuracy so far
                if best_acc < accuracy:
                    best_acc = accuracy
                    torch.save(model, os.path.join(save_path, 'best.pt'))
                if epoch + 1 == epochs:
                    torch.save(model, os.path.join(save_path, 'last.pt'))

                # Evaluate on the validation set after every epoch
                model.eval()
                test_loss = 0.0
                correct = 0
                total_num = len(val_loader.dataset)
                with torch.no_grad():
                    for data, target in val_loader:
                        data, target = data.to(device), target.to(device)
                        output = model(data)
                        loss = criterion(output, target)
                        pred = output.argmax(dim=1)
                        correct += (pred == target).sum().item()
                        test_loss += loss.item()

                acc = correct / total_num * 100
                avg_loss = test_loss / len(val_loader)
                s = f'val acc: {acc:.2f} val loss: {avg_loss:.3f}'
                print(s)
                f.write(s + '\n')
                writer.add_scalar('val_acc', acc, epoch)
                writer.add_scalar('val_loss', avg_loss, epoch)

        writer.close()


    if __name__ == '__main__':
        device = DEVICE
        epochs = EPOCHS
        batch_size = BATCH_SIZE
        input_size = INPUT_SIZE
        lr = LR

        # Directory that contains the train and val folders
        base_dir = r'C:\Users\Administrator.DESKTOP-161KJQD\Desktop\dog_cat'
        # Directory where checkpoints and logs are saved
        save_path = r'C:\Users\Administrator.DESKTOP-161KJQD\Desktop\dog_cat_save'

        dataset, classes, classes_num = get_dataset(base_dir, input_size=input_size)

        # ImageNet-pretrained ResNet50 with the final layer replaced to match our classes
        model = torchvision.models.resnet50(weights=ResNet50_Weights.IMAGENET1K_V1)
        num_ftrs = model.fc.in_features
        model.fc = nn.Linear(num_ftrs, classes_num)
        model.to(device)

        # Loss function: cross entropy
        criterion = nn.CrossEntropyLoss()
        # Optimizer
        optimizer = optim.SGD(model.parameters(), lr=lr)
        # Learning-rate schedule
        scheduler = LambdaLR(optimizer, lr_lambda=lambda epoch: update_lr(epoch, epochs))

        # Start training
        train(model, dataset, epochs, batch_size, device, optimizer, scheduler, criterion, save_path)

        # Save the labels
        with open(os.path.join(save_path, 'labels.txt'), 'w', encoding='utf-8') as f:
            f.write(f'{classes_num} {classes}')

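When training finishes, the save path contains the following files:

the best model (best.pt), the model from the last epoch (last.pt), the label list (labels.txt), the training log (log.txt), and the TensorBoard records.

Run tensorboard --logdir=. in that directory,

then open the address it prints in a browser to see plots of the training process.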

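4. Predicting on an image

From the user's side, a picture is uploaded from a web page or a phone for prediction, so the input here is taken as raw binary data (bytes) instead of a file path.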

mypredict.py

    import cv2
    import numpy as np
    import torch

    from classify_cfg import DEVICE, MEAN, STD


    def img_process(img_bytes, img_size, device, mean=MEAN, std=STD):
        """Decode raw image bytes into a normalized 1 x 3 x H x W tensor."""
        img_array = np.frombuffer(img_bytes, dtype=np.uint8)
        im0 = cv2.imdecode(img_array, cv2.IMREAD_COLOR)
        # OpenCV decodes to BGR, while training loaded the images as RGB through ImageFolder
        image = cv2.cvtColor(im0, cv2.COLOR_BGR2RGB)
        image = cv2.resize(image, (img_size, img_size))
        image = np.float32(image) / 255.0
        # Same per-channel normalization as in training (broadcast over H x W x 3)
        image -= np.array(mean, dtype=np.float32)
        image /= np.array(std, dtype=np.float32)
        # HWC -> CHW, then add the batch dimension
        image = image.transpose((2, 0, 1))
        im = torch.from_numpy(image).unsqueeze(0)
        return im.to(device)


    def predict(model_path, img, device):
        # best.pt holds the whole pickled model (torch.save(model, ...)), so a full load
        # is needed; weights_only requires PyTorch 1.13+ and defaults to True in recent releases.
        model = torch.load(model_path, map_location=device, weights_only=False)
        model.to(device)
        model.eval()
        with torch.no_grad():
            predicts = model(img)
        _, preds = torch.max(predicts, 1)
        return torch.squeeze(preds)


    if __name__ == '__main__':
        device = DEVICE
        # Class names in ImageFolder's index order (狗 = dog, 猫 = cat)
        classes = ['狗', '猫']

        model_path = r'C:\Users\Administrator.DESKTOP-161KJQD\Desktop\dog_cat_save\best.pt'
        img_path = r'C:\Users\Administrator.DESKTOP-161KJQD\Desktop\save_dir\狗\000000.jpg'

        # Read the image as bytes, the way an uploaded file would arrive
        with open(img_path, 'rb') as f:
            img_bytes = f.read()

        img = img_process(img_bytes, 160, device)
        pred = predict(model_path, img, device)
        print(classes[int(pred)])
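
The class list above is typed in by hand, but mytrain.py already stores it in labels.txt as the class count followed by the Python list, for example 2 ['狗', '猫']. The following is a minimal sketch of reading that file back, assuming exactly that format; load_labels is a hypothetical helper, not part of the original scripts.

```python
import ast


def load_labels(labels_path):
    """Parse a labels.txt written as f'{classes_num} {classes}'."""
    with open(labels_path, encoding='utf-8') as f:
        text = f.read().strip()
    # Split at the first space: the count comes first, the list repr after it.
    num_str, classes_repr = text.split(' ', 1)
    # literal_eval only evaluates Python literals, so it is safe on the list repr.
    classes = ast.literal_eval(classes_repr)
    if len(classes) != int(num_str):
        raise ValueError('class count does not match the stored list')
    return classes
```

Reading the list from the file keeps the prediction script in step with whatever class order ImageFolder assigned during training.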